It's 7:30 PM on a Thursday. Your last patient left two hours ago. You're staring at six undocumented sessions, trying to remember which patient said what. Your partner texted an hour ago asking when you're coming home. You haven't responded because you're still typing notes from Tuesday.

Sound familiar? Here's the uncomfortable truth: documentation is killing the therapeutic work you trained years to do. Every minute you spend typing "patient appeared anxious" is a minute you aren't fully present while that patient sits across from you, deciding whether to trust you with their trauma.

AI medical scribes promise to fix this. But after testing five different platforms across 40+ psychiatry and therapy practices internationally, I discovered something critical: most AI scribes are built for cardiologists and orthopedists. They fail spectacularly in therapy settings.

This guide will show you:

- Why standard medical AI completely misses therapeutic conversations

- The 5 specific features that matter for mental health (and the 4 "impressive" features that don't)

- How to test an AI scribe in 10 days and know if it's worth keeping

- What mental health professionals actually need (hint: it's not what vendors are selling)

If you're tired of staying late to finish notes or, worse, typing during sessions and breaking eye contact with patients, this is for you.

The Core Problem: Medical AI Doesn't Understand Therapy

Standard AI scribes are trained on cardiology appointments and orthopedic consultations - quick visits with clear symptoms and straightforward documentation. Therapy operates in an entirely different universe.

Therapeutic language is invisible to medical AI

When you write "patient showed resistance through deflection when discussing family dynamics," medical AI outputs: "Patient discussed family." You've lost the intervention, the pattern you observed, and the clinical significance.

CBT thought restructuring, psychodynamic interpretations, EMDR processing, DBT skills - none of this therapeutic language exists in standard medical models. They capture "patient presents with chest pain" not "patient's affect flattened when discussing father, suggesting unprocessed grief."

Silence carries as much clinical weight as words

That 15-second pause after "What happened next?" isn't dead air. It's your patient deciding whether to trust you. The shift from animated to quiet speech signals you touched a defensive wound. These moments guide your next intervention.

Medical AI sees silence as background noise to eliminate. In therapy, pauses are often where breakthroughs happen.

Privacy stakes are exponentially higher

General medicine: blood pressure medication, sprained ankle. Mental health: suicidal ideation, unreported trauma, substance use violating probation, affairs ending marriages, intrusive thoughts about children.

Mental health patients worry about custody battles, job termination, criminal charges, family exile, religious shame. The moment they see recording equipment without clear privacy assurances, trust fractures.

Risk documentation can't wait for your review time

It's 4:15 PM. Your patient mentions passive suicidal ideation. You conduct a 20-minute risk assessment and safety planning. They leave at 4:35. Your next patient has been waiting since 4:30.

When do you document that risk assessment? Tonight when exhausted? Tomorrow morning? It needs to be captured immediately in a structured format you can review in under a minute. If that patient presents to an ER tonight, your afternoon documentation becomes legally critical. Standard medical AI buries risk content in paragraphs. You need it flagged and structured.

What Mental Health Practices Actually Need From AI Scribes

Forget fancy features. Here's what actually matters:

Invisible During Sessions

The therapeutic alliance is your primary tool. Research shows eye contact and presence predict outcomes better than technique alone.

If you're thinking about the AI during a session, you're not present with your patient. The technology must disappear completely. No laptop barrier. No speaking unnaturally. No stopping to check if it's recording.

Test: If you can't forget the technology exists, it's wrong for therapy.

Captures Process, Not Just Problems

Medical notes: "Patient reports depression."

Therapy notes: "Patient used distancing language when discussing mother ('she was fine'), recognized this pattern when reflected, connected it to current relationship avoidance, significant progress in awareness."

Your AI must capture interventions used, patient responses, emerging themes, resistance patterns, progress toward goals, and what you're tracking for next session. That's the difference between transcription and clinical documentation.

Absolute Privacy Guarantees

Mental health patients share substance use that could violate probation, unreported trauma, suicidal thoughts, relationship conflicts that could end marriages. These are life-altering secrets requiring absolute protection.

Your AI needs: HIPAA-grade encryption, compliance with local data protection requirements, clear data residency, no permanent audio storage, and access restricted to the treating clinician.

You must explain this confidently in 30 seconds when patients ask "Is this really private?"

Understands Therapeutic Modalities

Psychodynamic sessions document transference patterns. CBT sessions note cognitive distortions. EMDR tracks bilateral stimulation targets. Group therapy requires documenting multiple perspectives.

Your AI should adapt to how you practice, not force every session into a generic medical template. Look for therapy-specific templates or easy customization.

Skip These "Features"

Don't pay extra for:

- Deep EHR integrations that break with updates

- Analytics dashboards you'll never use

- Voice commands requiring syntax memory

- Endless customization needing multiple training sessions

How Different Documentation Methods Compare

| Approach | What You Gain | What It Costs | Reality for Therapists |
|---|---|---|---|
| Type notes during session | Free, immediate documentation | Destroys presence, patients feel ignored | Therapists sacrifice therapeutic alliance to stay current on charts |
| Document from memory later | Full presence in session | Exhausting, details fade, risk documentation suffers | Clinicians stay 2-3 hours after last appointment |
| Hire a human scribe | Accurate, adapts to your style | Destroys intimacy, expensive, patients won't share freely | Nearly impossible to find qualified mental health scribes |
| Dictate after each session | Faster than typing | Still requires reconstructing sessions from memory | Doesn't solve the real problem |
| Ambient AI scribe | Automatic, affordable, preserves presence | Quality varies, must understand therapy language | Best option IF built for mental health documentation |

For mental health practices, AI scribes offer the best balance - if they actually understand therapeutic documentation.

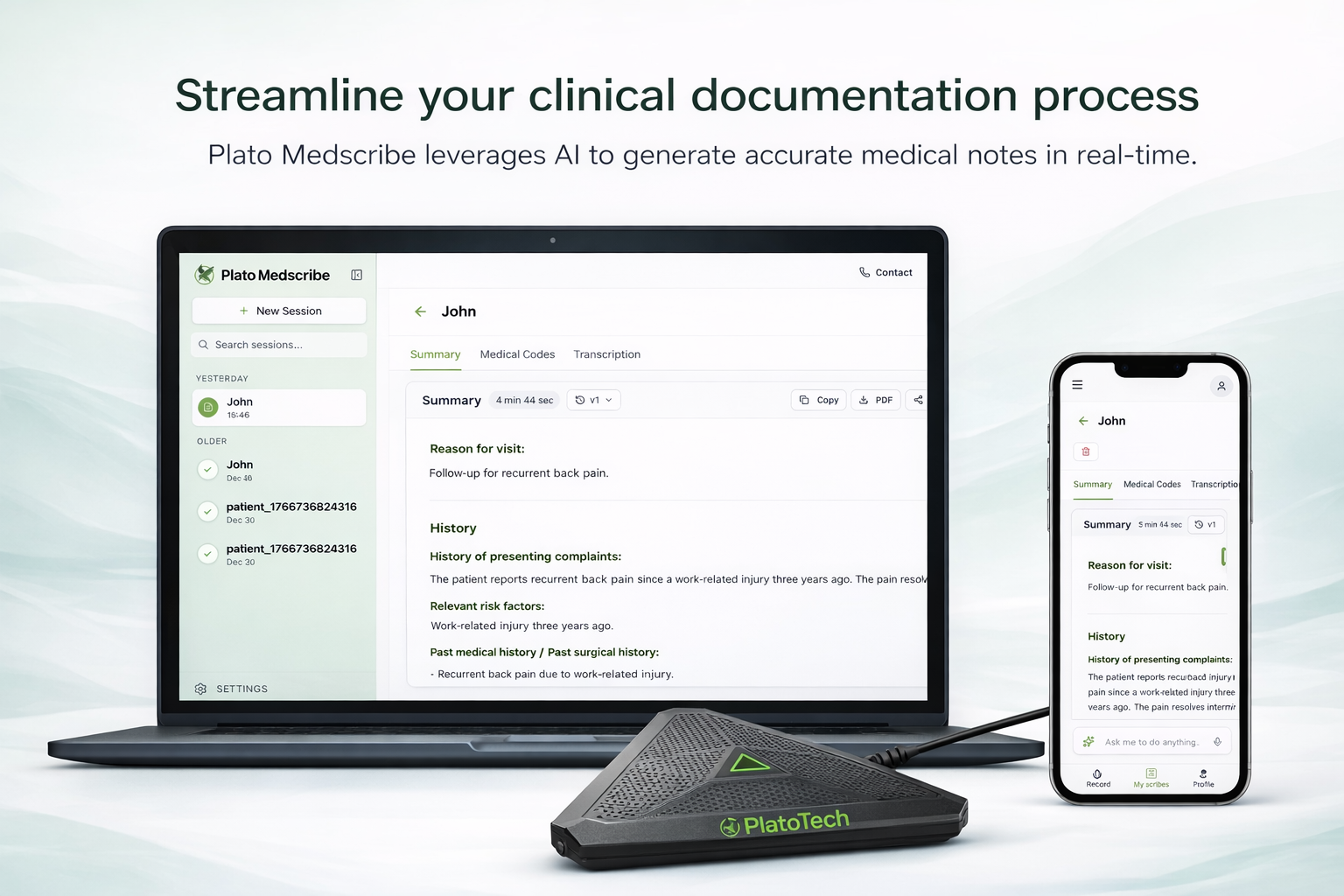

Mental Health AI Scribe Comparison

Capability comparison based on pilots across psychiatry and therapy practices:

| Capability | Plato Tech | Suki AI | DeepScribe | Nuance DAX |
|---|---|---|---|---|
| Trained on Therapy Language | Yes | Limited | No | No |
| Multilingual Support | Excellent (98 languages) | Basic | Limited | Limited |
| Therapy-Specific Templates | CBT, DBT, Psychodynamic, EMDR, Group | Generic | Generic | Generic |
| Risk Assessment Structuring | Auto-flagged & formatted | Basic | Basic | Basic |
| Free Recording Hardware | Yes (Plato Echo) | No | No | Sold separately |
| No Audio Storage Default | Yes | Unclear | No | Varies |
| Data Compliance | HIPAA, GDPR, regional standards | HIPAA | HIPAA | HIPAA |
| Monthly Cost / Clinician | Starting at $89 | ~$399 | ~$349 | ~$400+ |
| Setup Time | <5 min | ~30 min | ~45 min | ~1 hour |

Key takeaway: For mental health practices needing therapeutic language understanding, multilingual support, absolute privacy, and therapy-specific features, Plato Tech offers capabilities other platforms don't match at this price point.

Testing AI Scribes in Your Practice: The Smart Approach

- Start small. One or two therapists, two weeks maximum. Choose clinicians who are frustrated with documentation and open to trying new tools. Don't roll out enterprise-wide until you have proof.

- Test with complexity, not simplicity. Skip the simple intake forms. Test with intensive therapy sessions, crisis assessments, and multilingual patients. If it handles your hardest cases, everything else is manageable.

- Zero workflow changes. Therapists conduct sessions exactly as normal. The AI adapts to them, never the reverse. If they need to change how they speak or structure sessions, it's the wrong solution.

- Have a privacy script ready. "I use an AI assistant for notes so I can give you my full attention. Nothing is permanently recorded. Only I see the summary. Comfortable with that?" If patients object, honor it immediately.

- Measure one thing. Do clinicians finish documentation significantly faster while feeling more present in sessions? If not obvious by day 10, move on.

This is how we piloted Plato MedScribe across 40+ mental health practices globally. No long contracts. Just immediate value.

FAQ

How much time does AI scribing save?

Pilots show 60-90 minutes saved daily. Seven patients at 20 minutes per note = 140 minutes on documentation. Good AI cuts this to 15-20 minutes of review. One psychiatrist went from 2 hours post-clinic to 15 minutes total.
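If you want to run that arithmetic with your own caseload, here is a minimal sketch. The function name and parameters are illustrative only and not part of any vendor's tooling; the inputs are the figures quoted in this answer.

```python
def daily_minutes_saved(patients: int, minutes_per_note: int, review_minutes: int) -> int:
    """Minutes saved per day when hand-written notes are replaced by AI draft review."""
    manual_total = patients * minutes_per_note  # e.g. 7 patients * 20 min = 140 min
    return manual_total - review_minutes


# Figures from the example above: 7 patients, 20 minutes per note,
# and 15-20 minutes of total review time with an AI scribe.
slow_review = daily_minutes_saved(patients=7, minutes_per_note=20, review_minutes=20)
fast_review = daily_minutes_saved(patients=7, minutes_per_note=20, review_minutes=15)
print(f"Saved per day: {slow_review}-{fast_review} minutes")
```

This worked example comes out higher than the 60-90 minute pilot range, presumably because not every note takes a full 20 minutes by hand. Plug in your own numbers before drawing conclusions.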

Does it work for emotionally complex sessions?

Only if trained specifically on therapeutic conversations. Standard medical AI achieves high accuracy on medical visits but fails on therapy language. Look for AI trained on mental health sessions, professional hardware for quiet speech, and platforms supporting multiple languages if needed.

Can it handle crisis situations?

Advanced systems flag risk content and format it clearly with warning signs, protective factors, safety plan details, and clinical judgment. Standard medical AI buries this in paragraphs. Ask vendors: "Show me risk assessment documentation." If they can't demonstrate structured output, disqualify them.
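To make "structured output" concrete when you evaluate a demo, here is a rough sketch of what a structured risk note could look like. It assumes the four sections named above; the class, its field names, and the output layout are hypothetical and do not reflect how any particular platform stores or formats risk content.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RiskAssessment:
    """Hypothetical structured risk note; sections follow the list above."""
    warning_signs: List[str]
    protective_factors: List[str]
    safety_plan: List[str]
    clinical_judgment: str

    def missing_sections(self) -> List[str]:
        """Names of sections left empty, so gaps are visible at review time."""
        sections = {
            "warning_signs": self.warning_signs,
            "protective_factors": self.protective_factors,
            "safety_plan": self.safety_plan,
            "clinical_judgment": self.clinical_judgment.strip(),
        }
        return [name for name, value in sections.items() if not value]

    def render(self) -> str:
        """Format the note as a short flagged block instead of a buried paragraph."""
        def joined(items: List[str]) -> str:
            return "; ".join(items) or "(not documented)"

        return "\n".join([
            "RISK ASSESSMENT (flagged for review)",
            "Warning signs: " + joined(self.warning_signs),
            "Protective factors: " + joined(self.protective_factors),
            "Safety plan: " + joined(self.safety_plan),
            "Clinical judgment: " + (self.clinical_judgment.strip() or "(not documented)"),
        ])


# Illustrative entries only, not real patient data.
note = RiskAssessment(
    warning_signs=["passive suicidal ideation"],
    protective_factors=[],
    safety_plan=["safety plan reviewed in session"],
    clinical_judgment="",
)
print(note.render())
print("Incomplete sections:", note.missing_sections())
```

The point of the sketch is the shape: each section is its own field, and empty sections are surfaced rather than silently skipped. That is the kind of output to ask a vendor to demonstrate.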

What about privacy compliance?

Your solution must meet: HIPAA compliance (US), GDPR (EU), regional requirements, data residency clarity, encryption, no permanent audio storage, and access limited to the treating clinician. Ask: "Where is data processed? How long is audio stored? Who can access it?" Vague answers = red flags.

How do I choose the right solution?

Prioritize: full presence with patients, a clear privacy explanation, understanding of therapeutic process, multilingual capability if needed, and structured risk documentation. Run a two-week pilot. If clinicians don't feel more connected to patients and leave earlier, it's not working.

The Real Goal: Protecting the Therapeutic Work

Nobody trains for years in clinical psychology to become a medical transcriptionist. You became a therapist because sitting with another human in their pain felt like work worth doing. Every hour spent typing is an hour not doing that work.

The right AI scribe doesn't change your therapy. It protects the space where therapy happens.

When you stop thinking "How will I document this?" and start thinking "They're finally ready to talk about what really happened," the technology has succeeded by disappearing completely.

See how Plato MedScribe works for mental health professionals

Book a 5-minute demo or try free for 14 days. If you're not finishing earlier and feeling more present, you don't pay.
